Box Blur

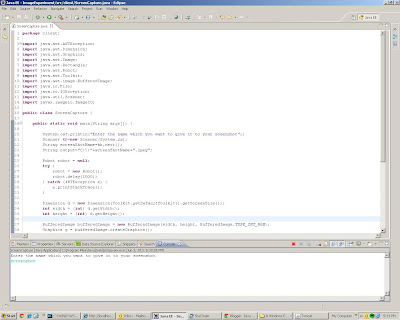

As I promised in my earlier posts, we are back to image blurring. In this post, I will try to explain Box Blur. Box Blur is an image filter in which each pixel of the output is the average of the corresponding input pixel and its neighbors inside a square window (the "box"). Since averaging pixel values is simple, the algorithm is quite easy to implement. One of the major advantages of Box Blur is that applying it repeatedly approximates a Gaussian blur: each extra pass convolves the result with the box kernel again, and the combined kernel moves closer to a Gaussian shape.

Original / Box Blurred
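To make the averaging concrete, here is a minimal sketch in plain Python. It is only an illustration under a few assumptions of mine: the image is a grayscale list of rows, the names `box_blur`, `repeat_box_blur`, `radius`, and `passes` are made up for this example, and pixels near the border are averaged over only the part of the window that falls inside the image (other edge policies are equally valid).

```python
def box_blur(image, radius=1):
    """Return a box-blurred copy of a grayscale image (list of rows)."""
    height = len(image)
    width = len(image[0]) if height else 0
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Clip the window to the image bounds (assumed edge policy).
            y0, y1 = max(0, y - radius), min(height - 1, y + radius)
            x0, x1 = max(0, x - radius), min(width - 1, x + radius)
            total = 0.0
            for ny in range(y0, y1 + 1):
                for nx in range(x0, x1 + 1):
                    total += image[ny][nx]
            # Divide by the number of pixels actually inside the window.
            count = (y1 - y0 + 1) * (x1 - x0 + 1)
            result[y][x] = total / count
    return result


def repeat_box_blur(image, radius=1, passes=3):
    """Apply box_blur several times; more passes look closer to a Gaussian blur."""
    for _ in range(passes):
        image = box_blur(image, radius)
    return image


if __name__ == "__main__":
    # A single bright pixel in the middle of a dark 3x3 image.
    spot = [[0, 0, 0],
            [0, 9, 0],
            [0, 0, 0]]
    for row in box_blur(spot, radius=1):
        print(row)
```

With `radius=1` the window is 3x3, so the bright pixel in the middle is spread evenly over its neighbors. This direct version does work proportional to the window area for every pixel; it is meant for reading, not for speed.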